A Neural Network in 11 lines…of VBA!

Machine Learning is hard, and Deep Learning is possibly even harder to grasp: matrices, dot products, optimization functions, ReLU, etc. are enough to make a math graduate scratch their head sometimes. That’s why, in my opinion, creating something simple should always be the first step and should never be underestimated. It doesn’t matter how naive it is: make something that works and build from there.

On my slow & humble learning path, one of the best sources, and a highly recommended read for whoever wants to jump into this field, is the following amazingly well-written article: iamtrask.github.io/2015/07/12/basic-python-network

It manages to explain in great detail how to build a very simple & concise Neural Network in Python by analysing, line by line, the inner workings of such a marvellous construct (the following code comes from the aforementioned article):

That’s beautiful and concise, but then I started thinking: “I need to replicate this myself, otherwise there’s no chance I’ll get it completely”. I’ve rewritten it in Python, but come on, that’s easy! So I looked around and, weirdly enough, the language I’m most fluent in other than Python and SQL is… VBA! Yep, the loved and hated scripting language behind our clunky Excel spreadsheets: single-threaded, with poor memory management, and almost object-oriented (check out this discussion for more info: stackoverflow.com/questions/31858094/is-vba-an-oop-language-and-does-it-support-polymorphism). That’s about as far as you could possibly get from an optimal language for Machine Learning, but who cares, let’s do it anyway! Moreover, a fully functional neural network running natively in vanilla Excel? In my favourite spreadsheet? Come on, we have all dreamed of that; if you haven’t, you don’t know how powerful a NN can be.

The challenge:

Life is either a daring adventure or nothing at all. — Helen Keller

One of the major drawbacks of the project is that I’ll have to code most of the useful libraries and functions entirely from scratch. Let’s first write down what I need to do and what issues I’m expecting:

- I’ll need to store and manipulate matrices. You see that np.array([[0,1,1,0]])? That’s a NumPy array, extremely useful to sum, multiply, dot-product, transpose and generally deal with matrices. I’ll need something similar first.

- Once I’ve got my own version of the NumPy array, I’ll need to teach VBA how to deal with it in a meaningful way. I decided to create a new class only for that purpose to keep the code concise; it’ll do the job.

- Performance. There’s not much I can do in this regard: floating-point operations work better on a GPU but, to my knowledge, VBA doesn’t support that. Moreover, I have to rely on a single thread… this is gonna be slow but hey, the goal is the journey, right?

- Conciseness. No chance I’ll be able to summarise the entire code in 11 lines as I’m missing most of the building blocks, but I’ll do my best to have the final network rely on those blocks and keep the final code as close to the original as possible.

- No external add-ins, no references, just pure and simple VBA code. I want to paste my classes into a new workbook and have them be fully functional right out of the box.

1. The clsTensor:

Someone working at Google is probably already shouting at me, but I decided to name my class after the wonderfully written TensorFlow library (if you don’t know what that is, go take a look NOW, this is what ML dreams are made of, quite literally in some cases: tensorflow.org/).

Each tensor will contain an array inside storing all the values, let’s look at the constructor:

What’s going on here? Not much really, just creating an array called tensor. If randomize is true I fill it with random numbers between -1 and 1 (I’ll use that for the NN weights), plus a small check to make sure the user doesn’t end up with a tensor with 0 rows/columns.

I’ll also need to update the values when the network trains. change_tensor will do just fine as I’ll update the entire array in one go; in my experience this is faster than updating the values one by one.
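For readers who don’t have the workbook at hand, the constructor and change_tensor logic described above can be sketched in Python (the language of the original article) rather than VBA. This is only a minimal sketch and not the actual clsTensor code: the class name Tensor, the rows/cols attributes and the zero fill for non-random tensors are all assumptions made for illustration.

```python
import random


class Tensor:
    """Illustrative Python stand-in for clsTensor; all names are assumptions."""

    def __init__(self, rows, cols, randomize=False):
        # Small check: refuse tensors with 0 rows or 0 columns.
        if rows < 1 or cols < 1:
            raise ValueError("a tensor needs at least 1 row and 1 column")
        self.rows = rows
        self.cols = cols
        if randomize:
            # Random values between -1 and 1, used later for the weights.
            self.tensor = [[random.uniform(-1.0, 1.0) for _ in range(cols)]
                           for _ in range(rows)]
        else:
            # Assumption: non-random tensors start filled with zeros.
            self.tensor = [[0.0] * cols for _ in range(rows)]

    def change_tensor(self, values):
        # Replace the whole array in one go instead of cell by cell.
        self.tensor = [list(row) for row in values]
        self.rows = len(self.tensor)
        self.cols = len(self.tensor[0])


weights = Tensor(3, 10, randomize=True)   # e.g. a 3x10 weight matrix
weights.change_tensor([[0.5, -0.5], [0.1, 0.2]])
```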

What if I want to see what’s going on inside the tensor? Sure, I can use the VBA editor’s “Locals” window, but I freaking love Python’s way of displaying lists, so I’m gonna imitate just that in the terminal. A 5x2 clsTensor will be printed as such: [[0.3891, 0.3935],[0.0400,0.0356],[0.1765,0.1732],[0.9800,0.9804],[0.9958, 0.9958]], sweet.
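The printing idea is easy to illustrate outside VBA. The sketch below is in Python and is not the author’s routine: the function name format_tensor, the fixed 4 decimals and the exact separators are assumptions chosen to resemble the sample output.

```python
def format_tensor(values, decimals=4):
    """Render a list of lists in a Python-like, fixed-precision style."""
    rows = []
    for row in values:
        # Every number is padded to the same number of decimals.
        cells = ", ".join(f"{v:.{decimals}f}" for v in row)
        rows.append("[" + cells + "]")
    return "[" + ",".join(rows) + "]"


print(format_tensor([[0.3891, 0.3935], [0.04, 0.0356]]))
# [[0.3891, 0.3935],[0.0400, 0.0356]]
```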

Since we are working in Excel, let’s make good use of that and have the clsTensor read directly from the spreadsheet; this will be super-useful later on. The code above does just that, but wait… there’s a caveat! Reading an Excel range into an array gives an array that starts from 1, and we all know how much we HATE those off-by-one bugs. Thus I create a temporary tensor with the same dimensions but 0-indexed and then copy all the values across. Does it add overhead? Yes. But it’s not much, and it saves a lot of hassle later down the line.
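The index shift itself is the only subtle part, so here is a small Python sketch of it. Python has no 1-indexed arrays, so the range read from the sheet is simulated with a dictionary keyed by (row, column), both starting at 1. The function name to_zero_based and the sample values are hypothetical.

```python
def to_zero_based(one_based, rows, cols):
    """Copy a 1-indexed grid into a 0-indexed list of lists."""
    result = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows + 1):
        for c in range(1, cols + 1):
            # Shift both indices down by one while copying.
            result[r - 1][c - 1] = one_based[(r, c)]
    return result


# Hypothetical 2x2 range, keyed the way a 1-indexed array would be.
sheet_values = {(1, 1): 0.0, (1, 2): 1.0, (2, 1): 1.0, (2, 2): 0.0}
zero_based = to_zero_based(sheet_values, 2, 2)
print(zero_based[0][1])  # 1.0
```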

And that’s it, our clsTensor is done. I could add much more but for now it’s fine. Now let’s teach this little guy how to interact with his mates.

2. The clsOperations:

This class will contain all the operations you can perform with the clsTensor object. I won’t explain all the perks of Object Oriented Programming here, but generally it’s a good way to keep everything contained. This class will provide the non-linear function, as well as the usual operations normally performed by NumPy (if you don’t know what that is, check out www.numpy.org/).

I’ve established a set of rules to keep consistency:

- Each function accepts clsTensor and returns clsTensor.

- Structure must be as similar as possible to avoid confusion.

- I clear the memory as soon as I can; not a big deal in this case but it’s good practice.

The above mimics the nonlin function in the Python code: it accepts a clsTensor, applies the sigmoid function (en.wikipedia.org/wiki/Sigmoid_function) or its derivative, and spits out the result as a new clsTensor.
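As a reference point, here is a plain-Python sketch of that behaviour working on lists of lists instead of a clsTensor. It follows the convention of the original Python article, where the derivative branch assumes its input is already a sigmoid output s and returns s * (1 - s). Whether the VBA version handles the derivative in exactly this way is an assumption.

```python
import math


def nonlin(values, deriv=False):
    """Apply the sigmoid (or its slope) to every element of a matrix."""
    result = []
    for row in values:
        if deriv:
            # Input is assumed to be a sigmoid output already: slope = s * (1 - s).
            result.append([v * (1.0 - v) for v in row])
        else:
            result.append([1.0 / (1.0 + math.exp(-v)) for v in row])
    return result


print(nonlin([[0.0]]))              # [[0.5]]
print(nonlin([[0.5]], deriv=True))  # [[0.25]]
```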

I will have to sum, subtract and multiply my tensors, right? The functions are pretty straightforward: they all accept 2 clsTensor objects, output the result as a new one, and use change_tensor to modify the value of the output. Luckily array manipulation is fairly quick in VBA and 2 for-loops will do just fine.
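The two-loop pattern can be sketched in Python as follows. This assumes the operations are element-wise (a matrix product would need a third loop); the helper name elementwise and the shape check are illustrative additions, not part of the VBA classes.

```python
def elementwise(a, b, op):
    """Combine two equally shaped matrices cell by cell with two for-loops."""
    rows, cols = len(a), len(a[0])
    if len(b) != rows or len(b[0]) != cols:
        raise ValueError("both matrices must have the same shape")
    result = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            result[i][j] = op(a[i][j], b[i][j])
    return result


def add(a, b):
    return elementwise(a, b, lambda x, y: x + y)


def subtract(a, b):
    return elementwise(a, b, lambda x, y: x - y)


def multiply(a, b):
    return elementwise(a, b, lambda x, y: x * y)


print(subtract([[1.0, 2.0]], [[0.5, 0.5]]))  # [[0.5, 1.5]]
```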

Now I’ll need my Transpose but that’s simple as well:
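The idea behind a transpose is just to swap the row and column indices while copying. A minimal Python sketch of that loop, on plain lists of lists rather than a clsTensor (the function name is illustrative):

```python
def transpose(values):
    """Return a new matrix whose rows are the columns of the input."""
    rows, cols = len(values), len(values[0])
    # The result has the dimensions swapped: cols x rows.
    result = [[0.0] * rows for _ in range(cols)]
    for i in range(rows):
        for j in range(cols):
            result[j][i] = values[i][j]
    return result


print(transpose([[1, 2, 3], [4, 5, 6]]))  # [[1, 4], [2, 5], [3, 6]]
```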

3. The NN module:

Now I have all the building blocks: I’ve created my own small Neural Network library! Let’s create a module and build the actual network there. But wait! The network shown at the beginning of this article has just one input and one output layer. That’s not enough; after all this work I want to do DEEEEP stuff! So I decided to move to the 2nd network shown in the original Python article, the one which has 1 input, 1 hidden and 1 output layer, and to play with that a little bit. Here’s my final result:

I love how the code resembles the original Python script. Let’s check the details; I won’t explain the inner workings as they are much better explained in the original article:

- I need to initialise all the variables before-hand, one line each, for some reason VBA initialise them as “variant” if you have 2 or more in one line and that’s a problem because the outputs of all the functions expect a

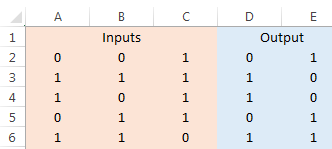

clsTensor . Dim op as clsOperationis the closest I’ve got toimport numpy as np, love it.- I grab the input & output values directly from the sheet (line 20–21, see below image for the values), I’ve extended the output to 2 columns because I want to test that in case I need to do classification in the future (NNs use one-hot-encoding of categorical features for that).

4. I extract the dimensions of the hidden layer directly from the tensors (line 22–23).

5. I’ve cranked the hidden layer up to 10 nodes (line 24), totally unnecessary overkill but I want to benchmark how this is performing.

6. I initialise the weights randomly (line 27–28), they are gonna be updated during the training.

7. For-loop with 10k iterations (line 30); that’s the actual training phase.

8. Forward propagation (line 32–34): here I update l1 and l2, which are my in-between layer and my output, respectively.

9. Calculate the error (line 37)

10. Use the derivative (line 40, the True in the function) to calculate the slope of the sigmoid and backpropagate (line 43) to get the error in the hidden layer; then use that to work out the slope in the previous layer (line 46).

This is not the best place to explain how backpropagation works, as it is a VERY complex topic and the real core of how Neural Networks learn. There is a great series of videos by 3Blue1Brown explaining this in great detail; go check it out as it is mesmerizing: youtube.com/watch?v=aircAruvnKk

11. Update/correct the weights with the new values (line 49–50)

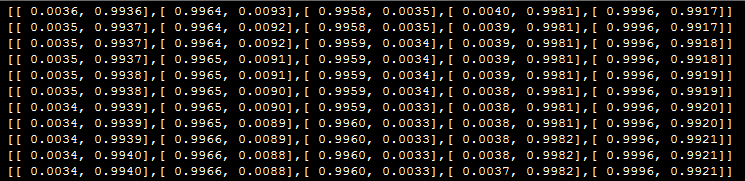

12. Every 100 iterations, print the current output (line 52).
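To make the steps above concrete without the VBA listing, here is a self-contained sketch of the same flow in plain Python, with no NumPy, in the spirit of building everything from scratch. It is not the author’s module: the helper names are illustrative, the input matrix X is hypothetical (the real values live on the sheet), and only the targets y, the 10 hidden nodes, the 10k iterations and the learning rate of 1 come from the post. The clamp inside sigmoid is an extra safety guard against overflow.

```python
import math
import random


def dot(a, b):
    # Matrix product of a (n x k) and b (k x m).
    return [[sum(a[i][p] * b[p][j] for p in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def zip_with(a, b, f):
    # Element-wise combination of two equally shaped matrices.
    return [[f(x, y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sigmoid(v):
    v = max(-500.0, min(500.0, v))  # guard against overflow in exp
    return 1.0 / (1.0 + math.exp(-v))


def nonlin(a, deriv=False):
    if deriv:
        # Input is a sigmoid output s, so the slope is s * (1 - s).
        return [[v * (1.0 - v) for v in row] for row in a]
    return [[sigmoid(v) for v in row] for row in a]


def train(X, y, hidden=10, iterations=10000, seed=1):
    rng = random.Random(seed)
    # Weights start as random values between -1 and 1.
    w0 = [[rng.uniform(-1, 1) for _ in range(hidden)] for _ in X[0]]
    w1 = [[rng.uniform(-1, 1) for _ in y[0]] for _ in range(hidden)]
    errors = []
    for j in range(iterations):
        # Forward propagation: hidden layer l1, output layer l2.
        l1 = nonlin(dot(X, w0))
        l2 = nonlin(dot(l1, w1))
        # Output error, scaled by the slope of the sigmoid.
        l2_error = zip_with(y, l2, lambda t, o: t - o)
        l2_delta = zip_with(l2_error, nonlin(l2, True), lambda e, s: e * s)
        # Backpropagate to the hidden layer.
        l1_error = dot(l2_delta, transpose(w1))
        l1_delta = zip_with(l1_error, nonlin(l1, True), lambda e, s: e * s)
        # Weight update with the learning rate fixed at 1.
        w1 = zip_with(w1, dot(transpose(l1), l2_delta), lambda w, d: w + d)
        w0 = zip_with(w0, dot(transpose(X), l1_delta), lambda w, d: w + d)
        if j % 100 == 0:
            # Log the mean absolute error every 100 iterations.
            total = sum(abs(e) for row in l2_error for e in row)
            errors.append(total / (len(y) * len(y[0])))
    return l2, errors


# Hypothetical inputs; the targets are the ones quoted in the post.
X = [[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1], [1, 1, 0]]
y = [[0, 1], [1, 0], [1, 0], [0, 1], [1, 1]]
```

Calling train(X, y) with the defaults mirrors the setup described above; the returned errors list holds one mean absolute error per 100 iterations, which makes it easy to check that the network is actually learning.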

4. The result:

This is how it looks after 10k iterations. Let me remind you that the target vector is [[0,1],[1,0],[1,0],[0,1],[1,1]]

Oh my god, it works!!! The entire process runs in about 3 seconds. I know that’s ridiculous by Python standards, but that’s expected: I’m not using low-level C libraries and it’s running on a laptop. Most importantly, I’ve learnt a lot along the way.

5. Next steps:

As this will probably never be useful in production (it is too slow), my primary concern is: what more can I learn? A few ideas I want to implement:

- One-hot encoding, so I can take a range of values and convert it quickly into a suitable format for the NN.

- Learning rate: currently it’s fixed at 1; I may want to change that for better optimization.

- Better / different activation functions: sigmoid is fine, but there are better choices.

- Scoring: right now I’m just comparing the output layer with the expected result, but it would be interesting to compute the R-squared score and log that to the console.

- Wrapper class: same as Keras for TensorFlow, it would be amazing to have a manager generating the NN automatically according to the input and output ranges and a set of parameters.
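Two of these ideas are small enough to sketch right away. The Python below is only an illustration of what one-hot encoding and an R-squared score could look like, not a planned implementation: the function names are hypothetical, and pooling all output cells into a single R-squared value is just one possible choice.

```python
def one_hot(labels):
    """Turn a list of category labels into rows of 0/1 indicator columns."""
    categories = sorted(set(labels))
    index = {c: i for i, c in enumerate(categories)}
    encoded = [[1.0 if index[label] == i else 0.0
                for i in range(len(categories))] for label in labels]
    return encoded, categories


def r_squared(targets, outputs):
    """R-squared over all cells; assumes the targets are not all identical."""
    flat_t = [v for row in targets for v in row]
    flat_o = [v for row in outputs for v in row]
    mean_t = sum(flat_t) / len(flat_t)
    ss_res = sum((t - o) ** 2 for t, o in zip(flat_t, flat_o))
    ss_tot = sum((t - mean_t) ** 2 for t in flat_t)
    return 1.0 - ss_res / ss_tot


encoded, categories = one_hot(["cat", "dog", "cat"])
print(encoded)     # [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
print(categories)  # ['cat', 'dog']
```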

Perhaps I’ll cover that in a follow-up post, for now, thanks for reading and happy VBA coding!

Update:

As I’ve been asked for the original file, please feel free to download it from here: https://drive.google.com/file/d/1XAOXheaOGE3dk8YoAaHrhkLbIbox85LP/view?usp=sharing
